Apple has introduced a new AI-powered tool called “Visual Intelligence” on its recently launched iPhone 16 series. It lets users scan their surroundings with the iPhone’s camera to identify objects and get information about them.

While Visual Intelligence appears to be a standalone feature, it might be a key step towards Apple’s much-anticipated augmented reality (AR) glasses.

What is Visual Intelligence on iPhones?

Visual Intelligence can recognise various items and provide information on them in real time. For instance, users will be able to identify animals, historical places, popular figures, and more. Users can also point their iPhone at a restaurant to learn more about it.

Apple’s augmented reality (AR) glasses

If technology like Visual Intelligence is integrated into AR glasses, it could work effortlessly, allowing users to gather information without having to take out their phones. For example, a user could simply look at a restaurant and ask the glasses for details.

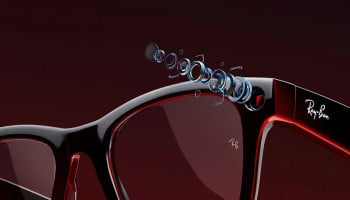

Meta has previously explored a similar concept with its own smart glasses, which showed that AI assistants in eyewear can work effectively.

Though Apple users already have the option of the Vision Pro, a spatial computer equipped with multiple cameras, it is not something they would want to wear outside, as it is heavy and impractical in everyday settings.

Notably, Apple has no plans to bring AR glasses to market until 2027 or later. Still, features like Visual Intelligence could eventually become the foundation that helps the company develop the technology.