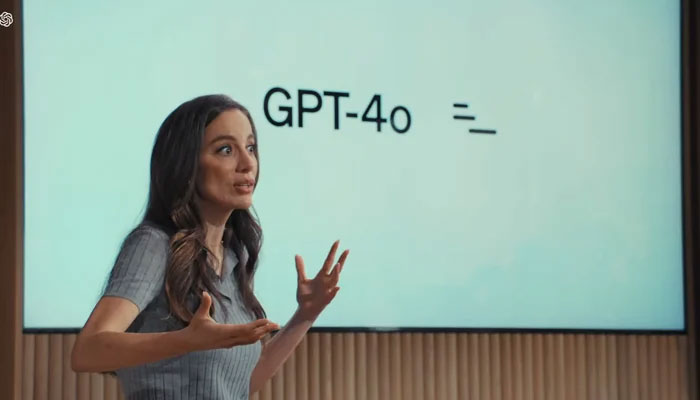

OpenAI, the San Francisco-based artificial intelligence (AI) research company backed by Microsoft, launched its highly anticipated GPT-4o on Monday. The new AI model, which powers the company's ChatGPT chatbot, can detect human emotions, according to OpenAI, marking a significant step in the company's efforts to expand its popular ChatGPT service.

According to OpenAI, GPT-4o can perceive emotions in real time through a camera. Demo videos showcasing the technology have sparked concerns among some users, who believe it could threaten a range of jobs. Experts, however, remain cautious, suggesting that Monday's presentation may not give a full picture of the product's capabilities.

GPT-4o also offers an audio interface that lets users interact with the chatbot by voice. It can answer questions, translate between languages, and even use a camera to analyse its surroundings and identify locations. OpenAI's website features demo videos in which employees, including chief technology officer Mira Murati, give GPT-4o a variety of commands.

While GPT-4o's potential is clear, some experts urge caution. Ben Leong, a computer science professor at the National University of Singapore, believes it is premature to make definitive judgements. He highlights the challenges AI still faces in replacing humans in roles such as customer support, teaching, and negotiation.

Simon Lucey, director of the Australian Institute for Machine Learning at the University of Adelaide, points to ongoing limitations. Even in its later versions, ChatGPT has made errors on basic calculations such as multiplying three-digit numbers. Lucey emphasises the continued need for human oversight: "At the end of the day, you still need a human to cast judgment over what it's producing."

Despite these concerns, the launch of GPT-4o represents a significant advance in AI technology. As OpenAI continues to refine the model's capabilities, its potential impact on many aspects of daily life remains a subject of ongoing debate.